Lagrangian and Hamiltonian mechanics

Newton’s laws and conservation of energy are two approaches to solving for the equations of motion of an object. We can make Newtonian mechanics more elegant by extending it to fields and potentials. But ultimately, Newtonian mechanics is still cumbersome to use. Here is an alternative, more beautiful approach: Lagrangian mechanics.

Lagrangian Mechanics

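The Lagrangian is the difference of an object’s kinetic and potential energies. For a single object moving along the \(x\) axis, it is given by:

\[ \mathcal{L}(x, \dot x, t) = K - U = \frac{1}{2} m \dot x^2 - U(x) \]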

Note that the dots denote time derivatives, that is, \(\dot x = \frac{dx}{dt}\). The action is a fundamental quantity of all physical systems and is given by the time integral of the Lagrangian between two times \(t_1\) and \(t_2\):

\[ S = \int_{t_1}^{t_2} \mathcal{L}(x, \dot x, t)\, dt \]

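The principle of stationary action states that, of all the paths a system could take between two fixed points in time, the path it actually takes is the one for which the action is stationary. What does stationary mean? Recall the idea of stationary points in calculus, which include minima and maxima: they are the points where the derivative of a function is zero. The action, however, is not an ordinary function. It takes an entire path \(x(t)\) and returns a number, so it is a function of functions (what we call a functional, which we’ll go more in-depth with later). For the action to be stationary means that the path \(x(t)\) is a stationary point of this functional: a small change to the path leaves the action unchanged to first order.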

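But what condition does this place on the motion? The short answer is that, along the path the system actually takes, the Lagrangian must obey the following equation, known as the Euler-Lagrange equation:

\[ \frac{\partial \mathcal{L}}{\partial x} - \frac{d}{dt}\left(\frac{\partial \mathcal{L}}{\partial \dot x}\right) = 0 \]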

where again, \(\dot x = \dfrac{dx}{dt}\) is the velocity. This is one of the most fundamental and profound equations of physics, and it works for any particle’s Lagrangian. Remember that “particle” is a generic term in physics and can refer to a big object like a planet or star. Once you write down the Euler-Lagrange equation, you just need to take the derivatives of the Lagrangian and substitute them in to get the equations of motion (the differential equations you solve to find the trajectory of the particle). Applying it, at least conceptually, is fairly simple.
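Because applying the equation is just differentiation and substitution, it is also easy to check numerically. The sketch below is an illustrative addition, not a method from the text. `euler_lagrange_residual` is a hypothetical helper that approximates every derivative with a central finite difference and evaluates \(\frac{\partial \mathcal{L}}{\partial x} - \frac{d}{dt}\frac{\partial \mathcal{L}}{\partial \dot x}\) along a candidate path. The test Lagrangian \(\mathcal{L} = \frac{1}{2} m \dot x^2 - \frac{1}{2} k x^2\) (a mass on a spring with assumed values \(m = 1\), \(k = 4\)) is our own choice of example.

```python
import math

def euler_lagrange_residual(lagrangian, x, xdot, t, h=1e-4):
    """Return dL/dx - d/dt(dL/dxdot) evaluated along a path at time t.

    lagrangian: callable L(position, velocity, time)
    x, xdot:    callables giving the path's position and velocity at a time
    All derivatives are approximated by central finite differences of step h.
    """
    def dL_dx(s):
        # partial derivative with respect to position, velocity held fixed
        return (lagrangian(x(s) + h, xdot(s), s) - lagrangian(x(s) - h, xdot(s), s)) / (2 * h)

    def dL_dv(s):
        # partial derivative with respect to velocity, position held fixed
        return (lagrangian(x(s), xdot(s) + h, s) - lagrangian(x(s), xdot(s) - h, s)) / (2 * h)

    # total time derivative of dL/dv along the path
    ddt_dL_dv = (dL_dv(t + h) - dL_dv(t - h)) / (2 * h)
    return dL_dx(t) - ddt_dL_dv


# Hypothetical test case: harmonic oscillator with m = 1, k = 4, so omega = 2
m, k = 1.0, 4.0

def oscillator(pos, vel, t):
    return 0.5 * m * vel**2 - 0.5 * k * pos**2

# x(t) = cos(2t) solves m x'' = -k x, so this residual should be close to zero
print(euler_lagrange_residual(oscillator, lambda t: math.cos(2 * t),
                              lambda t: -2 * math.sin(2 * t), 0.3))
# x(t) = cos(t) is not a solution; its exact residual is -3 cos(t)
print(euler_lagrange_residual(oscillator, math.cos, lambda t: -math.sin(t), 0.3))
```

A residual near zero at every time means the path satisfies the Euler-Lagrange equation. Here the true solution gives a value close to zero, while \(\cos t\) gives about \(-3\cos t\).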

But to gain a deeper understanding of why this equation works, we must first dive into the theory of functionals and variational calculus. If this section is too math-heavy, feel free to skip it, since it’s not required for applying the Euler-Lagrange equation. But for those who want the step-by-step derivation, let’s dive in!

Functionals

A functional is a function that takes in other functions as input. A function \(f\) takes in a real number \(x\) and outputs a number \(f(x)\). A functional, in contrast, takes in an entire function \(y(x)\) and outputs a single number. The functionals we will study are built from an expression \(\mathcal{L}(y(x), y'(x), x)\) that combines the input function, its derivative, and its argument.

The derivative appearing in \(\mathcal{L}(y, y', x)\) and the mention of the word “calculus” suggest that functionals are closely tied to differential operators, such as derivatives and integrals. Indeed, this is the case: a great number of functionals are in fact integrals.

Consider, for instance, a functional that appears, under a different name, in a first introduction to calculus: the formula for the arc length of a curve \(y(x)\) between \(x = a\) and \(x = b\):

\[ S = \int_a^b \sqrt{1 + \left(\frac{dy}{dx}\right)^2}\, dx \]

An introductory treatment of calculus may simply apply this formula to a given function \(y\) and its derivative \(y'\). The calculus of variations instead treats the formula as a functional of an arbitrary function \(f\):

\[ S[f] = \int_a^b \sqrt{1 + f'(x)^2}\, dx \]

The calculus of variations is concerned with finding the stationary points of functionals. In many cases, we want to obtain the minimum or maximum of a functional, but remember that stationary points are more general and can include things like saddle points and other points of inflection (i.e. points around which the second derivative changes sign).
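To make the idea of a functional concrete, here is a minimal Python sketch that evaluates the arc-length functional numerically. It is an illustration, not code from the original text. The name `arc_length`, the step sizes, and the midpoint-rule quadrature are our own assumptions. The function takes an entire function as input and returns one number, so feeding it two different curves between the same endpoints shows which one is shorter.

```python
import math

def arc_length(f, a, b, n=10_000, h=1e-6):
    """Approximate the arc-length functional S[f] = integral from a to b of sqrt(1 + f'(x)^2) dx.

    The derivative f' is estimated by a central difference with step h, and the
    integral by the midpoint rule with n subintervals.
    """
    dx = (b - a) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * dx                     # midpoint of the i-th subinterval
        fprime = (f(x + h) - f(x - h)) / (2 * h)   # numerical derivative f'(x)
        total += math.sqrt(1 + fprime**2) * dx
    return total

# One functional, two different input functions, two different output numbers.
# Both curves join (0, 0) to (1, 1).
print(arc_length(lambda x: x, 0.0, 1.0))      # straight line: about 1.41421 (sqrt 2)
print(arc_length(lambda x: x**2, 0.0, 1.0))   # parabola: about 1.47894
```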

In our case, we want to figure out which path \(y(x)\) gives the shortest distance between the points at \(x = a\) and \(x = b\). In mathematical terms, we want to find the function \(y(x)\) that minimizes \(S(y, y', x)\). But how do we do so? The answer requires a fair bit of explaining, so this is a section to be read through slowly.

The general functional optimization problem

Consider a general functional \(S(f, f', q)\), where the functional \(S\) is a function of \(f(q)\), \(f'(q)\), and \(q\). Here, \(f(q)\) is a parametric function of one parameter \(q\). We will explore specific cases of \(f(q)\) later (hint: one of these will be the position function \(x(t)\), which is a parametric function where the parameter is \(t\)). Our functional \(S(f, f', q)\) is given by:

\[ S(f, f', q) = \int_{q_1}^{q_2} \mathcal{L}\big(f(q), f'(q), q\big)\, dq \]

All of this is certainly very abstract, so let us examine what it all means. \(S\) is a functional, meaning that it takes some function \(f(q)\) and outputs a number. Specifically, it integrates a composite function of \(f\), its derivative \(f'(q)\), and its input \(q\) between two points \(q_1\) and \(q_2\) in the domain of \(f\). For notational clarity, we denote this composite function by \(\mathcal{L}(f, f', q)\). Since a definite integral returns a number, so does \(S\). To sum it all up, \(S\) is a functional that, given any function \(f(q)\), returns the definite integral of a composite function of \(f\), its derivative \(f'\), and its input \(q\).

We want to find the function \(f\) that makes \(S\) stationary (for example, a minimum or a maximum). This means we want a function for which \(S\) does not change, to first order, when \(f\) is changed slightly. This is similar to how the derivative is zero at a critical point in ordinary calculus. To find this optimal function, we add to \(f\) a function \(\eta(q)\) multiplied by a tiny number \(\varepsilon\) between \(q_1\) and \(q_2\). This tiny shift \(\varepsilon\eta\) is called a variation of \(f\), and it produces a corresponding change in \(S\). We choose the shift such that \(\eta(q_1) = \eta(q_2) = 0\), meaning that \(\eta(q)\) vanishes at the endpoints. This keeps the endpoints fixed, so the variation acts only between \(q_1\) and \(q_2\). We then have:

\[ f(q) \to f(q) + \varepsilon\,\eta(q), \qquad f'(q) \to f'(q) + \varepsilon\,\eta'(q) \]

\[ S(f + \varepsilon\eta, f' + \varepsilon\eta', q) = \int_{q_1}^{q_2} \mathcal{L}(f + \varepsilon\eta, f' + \varepsilon\eta', q)\, dq \]

Our next step is to find the amount of change \(\delta S\) between \(S(f, f', q)\) and \(S(f + \varepsilon \eta, f' + \varepsilon \eta', q)\). As a first step, we want to compute \(\mathcal{L}(f + \varepsilon \eta, f' + \varepsilon \eta', q)\), as that will allow us to compute \(S(f + \varepsilon \eta, f' + \varepsilon \eta', q)\), which we need in order to calculate \(\delta S\).

Note

We use \(\delta S\) instead of \(dS\) or \(\partial S\) as (1) \(\delta S\) is specifically for functionals and (2) the latter two symbols already have (multiple) reserved uses and we don’t want to muddle up the notation and make the meanings unclear.

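Recall how, in single-variable calculus, we can approximate the value \(y(x + h)\) of a function \(y(x)\) at a slightly shifted point, for some tiny number \(h\), by a first-order Taylor expansion:

\[ y(x + h) \approx y(x) + h\,\frac{dy}{dx} \]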

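This idea can be generalized to multivariable calculus, where we can approximate a small shift \(f(x + \sigma, y + \lambda)\) of a function \(f(x, y)\) by:

\[ f(x + \sigma, y + \lambda) \approx f(x, y) + \sigma\,\frac{\partial f}{\partial x} + \lambda\,\frac{\partial f}{\partial y} \]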

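In a similar way, in the calculus of variations, we can write (keeping only terms of first order in \(\varepsilon\)):

\[ \mathcal{L}(f + \varepsilon\eta, f' + \varepsilon\eta', q) \approx \mathcal{L}(f, f', q) + \varepsilon\,\eta\,\frac{\partial \mathcal{L}}{\partial f} + \varepsilon\,\eta'\,\frac{\partial \mathcal{L}}{\partial f'} \]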

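Now, by substitution, we can find \(S(f + \varepsilon \eta, f' + \varepsilon \eta', q)\):

\[ S(f + \varepsilon\eta, f' + \varepsilon\eta', q) = \int_{q_1}^{q_2} \left[\mathcal{L}(f, f', q) + \varepsilon\,\eta\,\frac{\partial \mathcal{L}}{\partial f} + \varepsilon\,\eta'\,\frac{\partial \mathcal{L}}{\partial f'}\right] dq \]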

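We may find the change in \(S\), which we will call \(\delta S\), by subtracting \(S(f, f', q)\) from both sides:

\[ \delta S = S(f + \varepsilon\eta, f' + \varepsilon\eta', q) - S(f, f', q) = \int_{q_1}^{q_2} \left(\varepsilon\,\eta\,\frac{\partial \mathcal{L}}{\partial f} + \varepsilon\,\eta'\,\frac{\partial \mathcal{L}}{\partial f'}\right) dq \]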

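Since we kept only terms of first order in \(\varepsilon\), \(\delta S\) here is the first-order change in \(S\). For the function that maximizes (or minimizes) \(S\), we expect this first-order change to vanish for every admissible \(\eta\). Again, that function is the one for which \(S\) does not change, to first order, with respect to \(f\). In formal language, this is called the process of varying \(S\) by a variation \(\varepsilon\eta\) and then demanding that \(\displaystyle \lim_{\varepsilon \to 0} \frac{\delta S}{\varepsilon} = 0\). This is why this form of calculus is called the calculus of variations or variational calculus. By setting \(\delta S = 0\) we have:

\[ \int_{q_1}^{q_2} \left(\varepsilon\,\eta\,\frac{\partial \mathcal{L}}{\partial f} + \varepsilon\,\frac{d\eta}{dq}\,\frac{\partial \mathcal{L}}{\partial f'}\right) dq = 0 \]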

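We would, however, prefer some way to get rid of the added function \(\eta(q)\), so as to obtain an equation that doesn’t depend on \(\eta\). We can do this by manipulating the above integral. First, we split it into two integrals, which will be convenient for the following steps:

\[ \int_{q_1}^{q_2} \frac{\partial \mathcal{L}}{\partial f}\,\eta\,\varepsilon\, dq + \int_{q_1}^{q_2} \frac{\partial \mathcal{L}}{\partial f'}\,\frac{d\eta}{dq}\,\varepsilon\, dq = 0 \]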

We now simplify the second integral using integration by parts. Recall that the integration by parts formula is as follows:

\[ \int_{q_1}^{q_2} u\, dv = uv\,\bigg|_{q_1}^{q_2} - \int_{q_1}^{q_2} v\, du \]

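If we let \(u = \dfrac{\partial \mathcal{L}}{\partial f'}\varepsilon\) and \(dv = \dfrac{d\eta}{dq}\,dq\), then \(v = \displaystyle \int \dfrac{d\eta}{dq}\, dq = \eta(q)\) and \(du = \dfrac{d}{dq} \left(\dfrac{\partial \mathcal{L}}{\partial f'}\right)\varepsilon\, dq\). We substitute these in, leaving the first integral unchanged and applying integration by parts only to the second, and obtain:

\[ \int_{q_1}^{q_2} \frac{\partial \mathcal{L}}{\partial f}\,\eta\,\varepsilon\, dq + \frac{\partial \mathcal{L}}{\partial f'}\,\eta\,\varepsilon\,\bigg|_{q_1}^{q_2} - \int_{q_1}^{q_2} \eta\,\frac{d}{dq}\left(\frac{\partial \mathcal{L}}{\partial f'}\right)\varepsilon\, dq = 0 \]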

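But recall from earlier that we defined \(\eta(q)\) such that \(\eta(q_1) = \eta(q_2) = 0\). This means that the boundary term \(\dfrac{\partial \mathcal{L}}{\partial f'} \eta\,\varepsilon\bigg|_{q_1}^{q_2}\) vanishes. Therefore, we are only left with:

\[ \int_{q_1}^{q_2} \frac{\partial \mathcal{L}}{\partial f}\,\eta\,\varepsilon\, dq - \int_{q_1}^{q_2} \eta\,\frac{d}{dq}\left(\frac{\partial \mathcal{L}}{\partial f'}\right)\varepsilon\, dq = \int_{q_1}^{q_2} \left[\frac{\partial \mathcal{L}}{\partial f}\,\eta\,\varepsilon - \eta\,\frac{d}{dq}\left(\frac{\partial \mathcal{L}}{\partial f'}\right)\varepsilon\right] dq \]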

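Where in the last step we re-joined the two integrals into one (which will make the next steps much easier). We know that this integral must be equal to zero, given that \(\delta S = 0\) is our fundamental requirement for finding the stationary points (minima, maxima, etc.) of functionals. Therefore we have:

\[ \int_{q_1}^{q_2} \left[\frac{\partial \mathcal{L}}{\partial f}\,\eta\,\varepsilon - \eta\,\frac{d}{dq}\left(\frac{\partial \mathcal{L}}{\partial f'}\right)\varepsilon\right] dq = \varepsilon \int_{q_1}^{q_2} \eta(q) \left[\frac{\partial \mathcal{L}}{\partial f} - \frac{d}{dq}\left(\frac{\partial \mathcal{L}}{\partial f'}\right)\right] dq = 0 \]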

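Where we factored the common factors \(\varepsilon\) and \(\eta(q)\) out in the last step. Since \(\varepsilon \neq 0\), the integral must vanish for every choice of \(\eta(q)\) that is zero at the endpoints. By the fundamental lemma of the calculus of variations, the rest of the integrand must therefore be zero as well, so the quantity in the square brackets must vanish. That is:

\[ \frac{\partial \mathcal{L}}{\partial f} - \frac{d}{dq}\left(\frac{\partial \mathcal{L}}{\partial f'}\right) = 0 \]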

The last result is the general form of the Euler-Lagrange equation for our functional \(S\). Since our functional is a very general one, the Euler-Lagrange equation applies to a huge set of functionals: indeed, all functionals of the form \(S[f(q), f'(q), q]\). It is customary to use square brackets when writing out a functional in its full form, but for our short form \(S(f, f', q)\) it is permissible to simply use parentheses. The Euler-Lagrange equation is extremely important and useful, so let us write it down one more time:

\[ \boxed{\frac{\partial \mathcal{L}}{\partial f} - \frac{d}{dq}\left(\frac{\partial \mathcal{L}}{\partial f'}\right) = 0} \]

Application of the Euler-Lagrange equation to arc length

Now, let us return to our example of the arc length functional:

\[ S = \int_a^b \sqrt{1 + y'^2}\, dx \]

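We want to find the \(y(x)\) that minimizes this functional, and for this we can use the Euler-Lagrange equation. In this case, \(q = x\), \(f = y(x)\), \(f' = y'\), and \(\mathcal{L} = \sqrt{1 + y'^2}\), so the Euler-Lagrange equation for this particular functional reads:

\[ \frac{\partial}{\partial y}\sqrt{1 + y'^2} - \frac{d}{dx}\left(\frac{\partial}{\partial y'}\sqrt{1 + y'^2}\right) = 0 \]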

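We may now compute the derivatives. This is much simplified by the fact that \(\mathcal{L} = \sqrt{1 + y'^2}\) does not depend on \(y\), but we must be careful to remember that \(\dfrac{d}{dx} f(y') = f'(y')y''\) due to the chain rule:

\[ \frac{\partial \mathcal{L}}{\partial y} = 0, \qquad \frac{\partial \mathcal{L}}{\partial y'} = \frac{y'}{\sqrt{1 + y'^2}}, \qquad \frac{d}{dx}\left(\frac{\partial \mathcal{L}}{\partial y'}\right) = \frac{y''}{\sqrt{1 + y'^2}} - \frac{y'^2\, y''}{(1 + y'^2)^{3/2}} \]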

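Thus, substituting into the Euler-Lagrange equation and putting both terms over a common denominator, we have:

\[ 0 - \left[\frac{y''}{\sqrt{1 + y'^2}} - \frac{y'^2\, y''}{(1 + y'^2)^{3/2}}\right] = -\frac{1}{(1 + y'^2)^{3/2}}\left[(1 + y'^2)\,y'' - y'^2\,y''\right] = 0 \]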

Note

The differentiation is indeed quite tedious, and we could have used a computer algebra system to take the derivatives for us and speed up this process. We will cover computer algebra systems in depth in Chapter 2.

While this may look complicated, the prefactor \(\dfrac{1}{(1 + y'^2)^{3/2}}\) is never zero, so the quantity in the square brackets must be zero:

\[ (1 + y'^2)\,y'' - y'^2\,y'' = y'' = 0 \]

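This becomes a differential equation that is straightforward to integrate:

\[ \frac{d^2 y}{dx^2} = 0 \quad\Longrightarrow\quad \frac{dy}{dx} = m \quad\Longrightarrow\quad y(x) = mx + b \]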

Where \(m, b\) are constants of integration. This is simply the equation of a straight line! By applying the calculus of variations, we have therefore shown that the shortest path between two points, which in functional terms is the path that minimizes the arc length, is a straight line. It may seem to be an obvious result, but proving it required quite a bit of calculus!
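The straight-line result can also be checked numerically. If \(y = mx + b\) makes the arc length stationary, then perturbing it by \(\varepsilon\eta(x)\), with \(\eta\) vanishing at the endpoints, should change \(S\) only at second order in \(\varepsilon\). The first-order term \(\varepsilon\int \frac{\partial \mathcal{L}}{\partial y'}\,\eta'\,dx\) integrates to zero because \(\frac{\partial \mathcal{L}}{\partial y'}\) is constant along a line. A second-order Taylor expansion of \(\sqrt{1 + y'^2}\) predicts \(\delta S \approx \frac{\varepsilon^2}{2}\int \frac{\eta'^2}{(1 + m^2)^{3/2}}\,dx\). The sketch below assumes the illustrative line \(y = 2x + 1\) on \([0, 1]\) and the variation \(\eta(x) = \sin(\pi x)\). For these choices the prediction is \(\delta S/\varepsilon^2 \approx \pi^2/(4 \cdot 5^{3/2}) \approx 0.2207\). The names and step sizes are our own assumptions.

```python
import math

def arc_length(f, a, b, n=20_000, h=1e-6):
    # Midpoint-rule approximation of S[f] = integral of sqrt(1 + f'(x)^2) dx,
    # with f'(x) estimated by a central difference
    dx = (b - a) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * dx
        fprime = (f(x + h) - f(x - h)) / (2 * h)
        total += math.sqrt(1 + fprime**2) * dx
    return total

def line(x):
    return 2.0 * x + 1.0            # y = mx + b with the illustrative choice m = 2, b = 1

def eta(x):
    return math.sin(math.pi * x)    # variation that vanishes at both endpoints x = 0 and x = 1

S0 = arc_length(line, 0.0, 1.0)         # close to sqrt(5), the straight-line length
predicted = math.pi**2 / (4 * 5**1.5)   # second-order prediction for dS / eps^2
for eps in (0.1, 0.05, 0.025):
    dS = arc_length(lambda x: line(x) + eps * eta(x), 0.0, 1.0) - S0
    print(f"eps = {eps:<6} dS = {dS:.3e}   dS/eps^2 = {dS / eps**2:.4f} (predicted {predicted:.4f})")
```

For each \(\varepsilon\) tried, \(\delta S\) is positive (the perturbed curves are longer) and shrinks like \(\varepsilon^2\). So \(\delta S/\varepsilon \to 0\), which is exactly the stationarity condition used in the derivation.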

Note that the one restriction we must place on this result is that we assume \(S = \displaystyle \int \sqrt{1 + y'^2}\, dx\) is the right formula for the arc length. For curves in the flat (Euclidean) plane, this is always the right formula, and Euclidean space is what we’ll work with 99% of the time. But in higher dimensions the formula must be generalized, and in non-Euclidean geometries it no longer gives the arc length at all. We must then use differential geometry to construct the right expression for the arc length, a topic we will cover in Chapter 3.

The Euler-Lagrange equation in physics

In physics, we consider a specific case of the Euler-Lagrange equation, where (as hinted at earlier) \(q = t\) is the time, \(f = x(t)\) is the position, and \(f' = \dot x = \dfrac{dx}{dt}\) is the velocity. Therefore, the Euler-Lagrange equation, in its common form used in physics (specifically, Lagrangian mechanics), becomes:

\[ \frac{\partial \mathcal{L}}{\partial x} - \frac{d}{dt}\left(\frac{\partial \mathcal{L}}{\partial \dot x}\right) = 0 \]

Again, the Euler-Lagrange equation can be used to solve for the equations of motion as long as the Lagrangian is known. For more general systems, where the motion is not confined to the \(x\) axis, there is one Euler-Lagrange equation for each coordinate, each of which takes the following form:

\[ \frac{\partial \mathcal{L}}{\partial q_i} - \frac{d}{dt}\left(\frac{\partial \mathcal{L}}{\partial \dot q_i}\right) = 0 \]

where \(q_i\) stands in for the particular coordinate, so \(q_i\) can be any one of \(x, y, z\) when working in Cartesian coordinates, or any one of \(r, \theta, \phi\) when working in spherical coordinates. When we are solving for the motion of a system of objects, rather than a single object, the kinetic and potential energies are those of the whole system, that is, the sums of the kinetic and potential energies of every object in the system:

\[ \mathcal{L} = K - U, \qquad K = \sum_n K_n, \qquad U = \sum_n U_n \]

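Note that the Euler-Lagrange equations in this form account only for forces that can be derived from the potential energy \(U\) (conservative forces such as gravity or a spring force). Suppose an additional force acts on the system, such as friction, that does work \(W\) and cannot be written in terms of a potential. The Euler-Lagrange equations then acquire an extra generalized-force term:

\[ \frac{\partial \mathcal{L}}{\partial q_i} - \frac{d}{dt}\left(\frac{\partial \mathcal{L}}{\partial \dot q_i}\right) + Q_i = 0, \qquad Q_i = \frac{\delta W}{\delta q_i} \]

where \(Q_i\) is the work done by the applied force per unit (virtual) change in the coordinate \(q_i\).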

Applying Lagrangian mechanics

Having examined the fundamental theory behind Lagrangian mechanics, we will now look at a few examples of increasing difficulty, to illustrate its usefulness and mathematical elegance.

Using Lagrangian mechanics to solve the harmonic oscillator

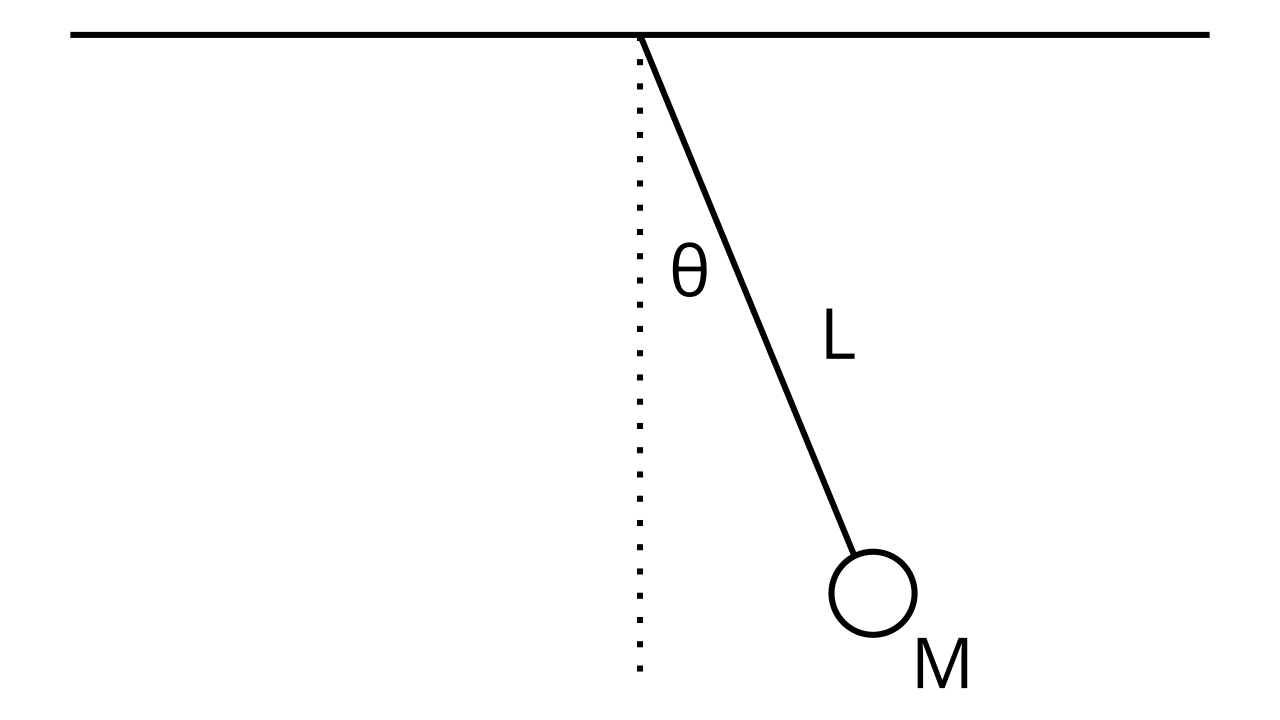

For the simple pendulum problem, we first find the position functions \(x(t)\) and \(y(t)\) in our coordinate system. The origin \((0, 0)\) of our coordinate system is the point where the pendulum is attached to the ceiling. Let \(l\) be the length of the pendulum and \(\theta\) its angle from the vertical. Using basic trigonometry, we find that:

\[ x(t) = l\sin\theta(t), \qquad y(t) = -l\cos\theta(t) \]

Where \(y(t)\) is negative because the pendulum bob hangs below our origin. Using our expressions for \(x(t)\) and \(y(t)\), we want to find the expression for the kinetic energy \(K\). We know that:

\[ K = \frac{1}{2} m v^2 \]

And that:

\[ v^2 = v_x^2 + v_y^2 = \left(\frac{dx}{dt}\right)^2 + \left(\frac{dy}{dt}\right)^2 \]

To do this, we solve for \(\frac{dx}{dt}\) and \(\frac{dy}{dt}\). This takes a bit of care: \(\theta\) is itself a function of time, \(\theta = \theta(t)\), so we must use the chain rule when differentiating \(x(t)\) and \(y(t)\) with respect to \(t\).

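Applying the chain rule, we have:

\[ v_x = \frac{dx}{dt} = l\cos\theta\,\dot\theta, \qquad v_y = \frac{dy}{dt} = l\sin\theta\,\dot\theta \]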

Now, we plug our values for \(v_x\) and \(v_y\) into the kinetic energy equation, which gives us:

\[ K = \frac{1}{2} m \left(l^2\cos^2\theta\,\dot\theta^2 + l^2\sin^2\theta\,\dot\theta^2\right) \]

If we simplify a little, by factoring and remembering that \(\cos^2 \theta + \sin^2 \theta = 1\), we get:

\[ K = \frac{1}{2} m l^2 \dot\theta^2 \]

Now we find the potential energy. Remember that close to Earth’s surface, the gravitational potential energy depends only on the height \(h\) of the point, measured relative to a reference height of zero. Here that reference is the origin. This means that:

\[ U = mgh \]

The height in this case is negative (because the bob is below the origin), and it is given by the vertical component of the pendulum’s length (hence \(\cos \theta\)), so:

\[ U = -mgl\cos\theta \]

Putting it all together, our Lagrangian is:

\[ \mathcal{L} = K - U = \frac{1}{2} m l^2 \dot\theta^2 + mgl\cos\theta \]

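Here, our coordinates are determined by the angle \(\theta\) alone, so there is a single Euler-Lagrange equation, which takes the form:

\[ \frac{\partial \mathcal{L}}{\partial \theta} - \frac{d}{dt}\left(\frac{\partial \mathcal{L}}{\partial \dot\theta}\right) = 0 \]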

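If we plug our Lagrangian into the Euler-Lagrange equation, we get:

\[ -mgl\sin\theta - \frac{d}{dt}\left(m l^2 \dot\theta\right) = -mgl\sin\theta - m l^2 \ddot\theta = 0 \]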

We can rewrite this as:

\[ m l^2 \ddot\theta = -mgl\sin\theta \]

And cancel out the common factors to yield:

\[ \ddot\theta = -\frac{g}{l}\sin\theta \]

We have arrived at our answer: this is the differential equation of the simple pendulum. This equation has no solution in terms of elementary functions. However, for small swings we can use the small-angle approximation \(\sin \theta \approx \theta\) to get:

\[ \ddot\theta \approx -\frac{g}{l}\theta \]

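Of which the solution is:

\[ \theta(t) = \theta_0\cos\left(\sqrt{\frac{g}{l}}\,t + \phi\right) \]

where the amplitude \(\theta_0\) and the phase \(\phi\) are fixed by the initial conditions.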

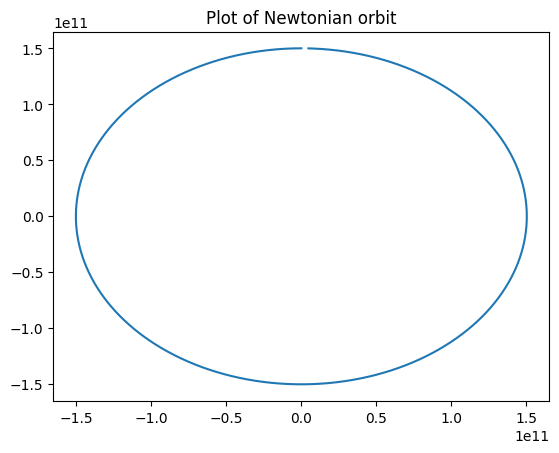
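To see how good the small-angle approximation is, one can integrate the full equation \(\ddot\theta = -\frac{g}{l}\sin\theta\) numerically. The result can then be compared with the small-angle solution \(\theta_0\cos(\sqrt{g/l}\,t)\) for a pendulum released from rest (zero phase). This sketch uses a hand-written classic fourth-order Runge-Kutta step rather than any particular library solver. The values \(g = 9.81\ \text{m/s}^2\) and \(l = 1\ \text{m}\), the step size, and the helper names are illustrative assumptions.

```python
import math

g, l = 9.81, 1.0   # illustrative values: g in m/s^2, pendulum length l in m

def rhs(theta, omega):
    # theta'' = -(g/l) sin(theta), written as the first-order system (theta', omega')
    return omega, -(g / l) * math.sin(theta)

def simulate(theta0, t_end, dt=1e-3):
    """Release from rest at angle theta0 (rad); return theta(t_end) using classic RK4."""
    theta, omega = theta0, 0.0
    for _ in range(round(t_end / dt)):
        k1 = rhs(theta, omega)
        k2 = rhs(theta + 0.5 * dt * k1[0], omega + 0.5 * dt * k1[1])
        k3 = rhs(theta + 0.5 * dt * k2[0], omega + 0.5 * dt * k2[1])
        k4 = rhs(theta + dt * k3[0], omega + dt * k3[1])
        theta += dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        omega += dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
    return theta

def small_angle(theta0, t):
    # Small-angle solution for release from rest: theta0 * cos(sqrt(g/l) * t)
    return theta0 * math.cos(math.sqrt(g / l) * t)

t = 5.0
for theta0 in (0.05, 1.0):   # roughly 3 degrees and 57 degrees
    print(f"theta0 = {theta0}: full = {simulate(theta0, t):+.5f} rad, "
          f"small-angle = {small_angle(theta0, t):+.5f} rad")
```

For \(\theta_0 = 0.05\) rad, the two results agree to better than \(10^{-3}\) rad after 5 s. For \(\theta_0 = 1\) rad, the full pendulum has a noticeably longer period, and the two curves have drifted well apart by then.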

Using Lagrangian mechanics to solve the orbit equation

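We want to derive the orbit of Earth around the Sun. To do so, we place the Sun (mass \(M\)) at the origin and treat it as fixed, since it is far more massive than the Earth (mass \(m\)). We describe the Earth’s position with polar coordinates \(r(t)\) and \(\theta(t)\), which gives:

\[ x(t) = r(t)\cos\theta(t), \qquad y(t) = r(t)\sin\theta(t) \]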

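Differentiating both (and remembering to use the product rule), we find that:

\[ \dot x = \dot r\cos\theta - r\sin\theta\,\dot\theta, \qquad \dot y = \dot r\sin\theta + r\cos\theta\,\dot\theta \]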

We can use these to find the kinetic energy (after some algebra and the identity \(\sin^2 \theta + \cos^2 \theta = 1\)):

\[ K = \frac{1}{2} m\left(\dot x^2 + \dot y^2\right) = \frac{1}{2} m\left(\dot r^2 + r^2\dot\theta^2\right) \]

The potential energy is given by \(U = -\frac{GMm}{r}\), so the Lagrangian is:

\[ \mathcal{L} = \frac{1}{2} m\left(\dot r^2 + r^2\dot\theta^2\right) + \frac{GMm}{r} \]

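Applying the Euler-Lagrange equations to each coordinate present in the Lagrangian, \(r\) and \(\theta\), we have:

\[ \frac{\partial \mathcal{L}}{\partial r} - \frac{d}{dt}\left(\frac{\partial \mathcal{L}}{\partial \dot r}\right) = m r\dot\theta^2 - \frac{GMm}{r^2} - m\ddot r = 0 \]

\[ \frac{\partial \mathcal{L}}{\partial \theta} - \frac{d}{dt}\left(\frac{\partial \mathcal{L}}{\partial \dot\theta}\right) = 0 - \frac{d}{dt}\left(m r^2\dot\theta\right) = 0 \]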

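Solving both equations for the second derivatives gives the equations of motion for the Earth:

\[ \ddot r = r\dot\theta^2 - \frac{GM}{r^2}, \qquad \ddot\theta = -\frac{2\dot r\dot\theta}{r} \]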

These can be solved analytically, but for the sake of simplicity, here they are solved using a numerical differential equation solver.

As can be seen in the plot below, the orbit is an ellipse, and we have arrived at this result using Lagrangian mechanics!

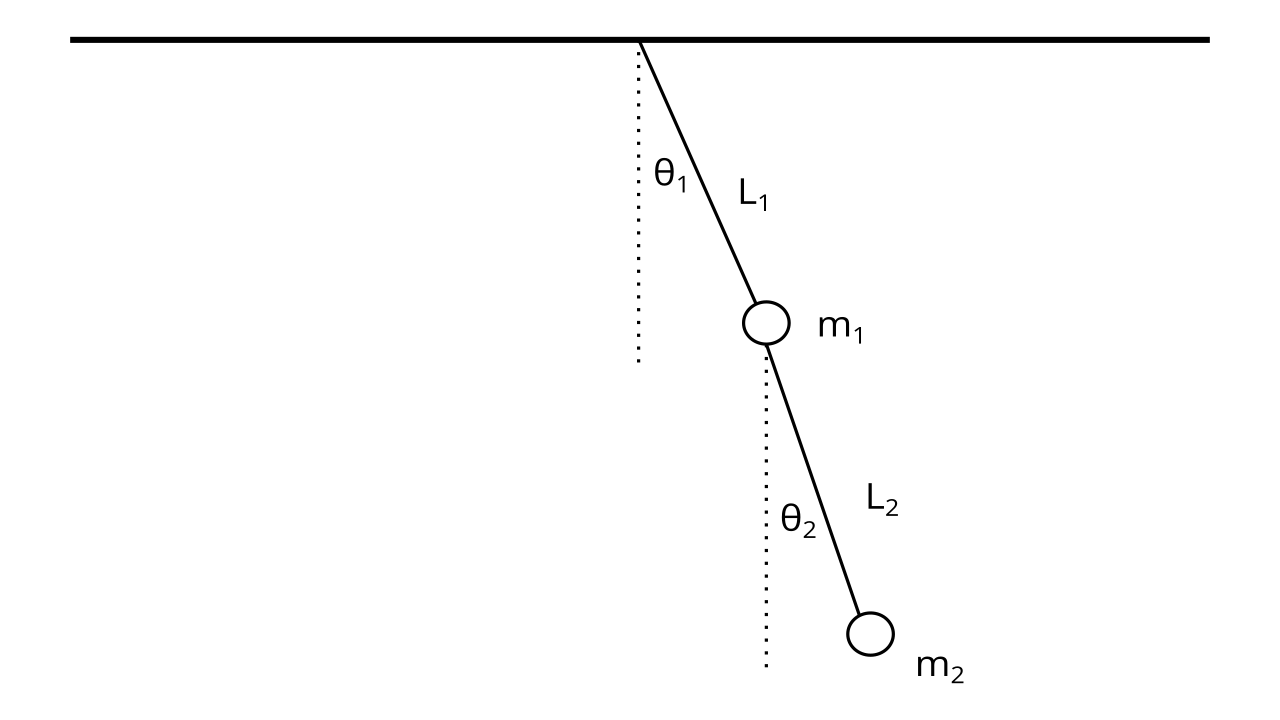
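Here is a minimal sketch of such a numerical solution. It integrates the two equations of motion \(\ddot r = r\dot\theta^2 - GM/r^2\) and \(\ddot\theta = -2\dot r\dot\theta/r\) with a hand-written classic fourth-order Runge-Kutta method, in place of a library solver. It assumes dimensionless units with \(GM = 1\) (not Earth’s actual orbital values). It starts at perihelion with \(r = 1\), \(\dot r = 0\), \(\theta = 0\), and \(\dot\theta = 1.2\). For these values the conserved angular momentum per unit mass is \(h = r^2\dot\theta = 1.2\). The exact solution is then the ellipse \(r = p/(1 + e\cos\theta)\) with \(p = h^2/GM = 1.44\) and \(e = 0.44\), so \(r\) should range from 1 to \(1.44/0.56 \approx 2.5714\).

```python
import math

GM = 1.0   # hypothetical dimensionless units in which G*M = 1

def derivs(s):
    r, rdot, theta, thetadot = s
    # r'' = r theta'^2 - GM / r^2  and  theta'' = -2 r' theta' / r
    return (rdot, r * thetadot**2 - GM / r**2, thetadot, -2 * rdot * thetadot / r)

def rk4_step(s, dt):
    k1 = derivs(s)
    k2 = derivs(tuple(x + 0.5 * dt * k for x, k in zip(s, k1)))
    k3 = derivs(tuple(x + 0.5 * dt * k for x, k in zip(s, k2)))
    k4 = derivs(tuple(x + dt * k for x, k in zip(s, k3)))
    return tuple(x + dt / 6 * (a + 2 * b + 2 * c + d)
                 for x, a, b, c, d in zip(s, k1, k2, k3, k4))

def orbit(r0=1.0, thetadot0=1.2, dt=1e-3, t_end=15.0):
    """Start at perihelion (rdot = 0, theta = 0); return Cartesian points and the final state."""
    s = (r0, 0.0, 0.0, thetadot0)
    points = []
    for _ in range(round(t_end / dt)):
        s = rk4_step(s, dt)
        r, _, theta, _ = s
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points, s

points, (r, rdot, theta, thetadot) = orbit()
radii = [math.hypot(x, y) for x, y in points]
print(f"closest approach {min(radii):.4f}, farthest {max(radii):.4f}")   # about 1.0000 and 2.5714
print(f"r^2 * thetadot at the end: {r**2 * thetadot:.6f}")               # conserved: 1.200000
```

The run covers slightly more than one period, \(2\pi a^{3/2} \approx 15.0\) in these units, where \(a = p/(1 - e^2)\). The points can be plotted to reproduce an ellipse like the one shown.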

Using Lagrangians to solve the double pendulum problem

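We will now tackle a problem that would be very difficult to solve using Newton’s laws, but is much easier with Lagrangian mechanics: the double pendulum. A pendulum of length \(l_1\) carries a bob of mass \(m_1\). From this bob hangs a second pendulum of length \(l_2\) with a bob of mass \(m_2\). The angles \(\theta_1\) and \(\theta_2\) of the two arms are measured from the vertical.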

Here, the notable difference is that we have a system as opposed to a single object, so we need the kinetic and potential energies of the entire system. To do this, we divide each into two parts:

\[ K = K_1 + K_2, \qquad U = U_1 + U_2 \]

Where \(K_1\) and \(K_2\) are respectively the kinetic energies of the first pendulum mass and second pendulum mass, and likewise with \(U_1\) and \(U_2\) and their potential energies.

We will first derive the kinetic energies, because they are harder :( As before, we first set up a coordinate system whose origin \((0, 0)\) is the point where the double pendulum is attached to the ceiling. Then, we write the position functions of the first pendulum:

\[ x_1(t) = l_1\sin\theta_1, \qquad y_1(t) = -l_1\cos\theta_1 \]

We figure these out from basic trigonometry and the fact that \(y_1(t)\) is negative, as it is below the origin. We then take the derivatives to find the \(x\) and \(y\) components of the velocity:

\[ \dot x_1 = l_1\cos\theta_1\,\dot\theta_1, \qquad \dot y_1 = l_1\sin\theta_1\,\dot\theta_1 \]

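Using this, we can find \(K_1\):

\[ K_1 = \frac{1}{2} m_1\left(\dot x_1^2 + \dot y_1^2\right) = \frac{1}{2} m_1\left(l_1^2\cos^2\theta_1\,\dot\theta_1^2 + l_1^2\sin^2\theta_1\,\dot\theta_1^2\right) \]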

Using the trig identity \(\sin^2 \theta + \cos^2 \theta = 1\), this is straightforward to simplify into:

\[ K_1 = \frac{1}{2} m_1 l_1^2 \dot\theta_1^2 \]

Then, we write the position functions of the second pendulum:

\[ x_2(t) = x_1(t) + l_2\sin\theta_2, \qquad y_2(t) = y_1(t) - l_2\cos\theta_2 \]

Here, we add the \(x\) and \(y\) displacement of the second bob relative to the first to the position of the first bob, to find the total displacement from the origin. Remember, we’re using the same coordinate system for both pendulums. If we substitute in \(x_1(t)\) and \(y_1(t)\), we have:

\[ x_2(t) = l_1\sin\theta_1 + l_2\sin\theta_2, \qquad y_2(t) = -l_1\cos\theta_1 - l_2\cos\theta_2 \]

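We compute their derivatives:

\[ \dot x_2 = l_1\cos\theta_1\,\dot\theta_1 + l_2\cos\theta_2\,\dot\theta_2, \qquad \dot y_2 = l_1\sin\theta_1\,\dot\theta_1 + l_2\sin\theta_2\,\dot\theta_2 \]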

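And then plug them into the kinetic energy formula to find:

\[ K_2 = \frac{1}{2} m_2\left[\left(l_1\cos\theta_1\,\dot\theta_1 + l_2\cos\theta_2\,\dot\theta_2\right)^2 + \left(l_1\sin\theta_1\,\dot\theta_1 + l_2\sin\theta_2\,\dot\theta_2\right)^2\right] \]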

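Expanding that out, and using \(\sin^2 \theta + \cos^2 \theta = 1\) to simplify, we have:

\[ K_2 = \frac{1}{2} m_2\left[l_1^2\dot\theta_1^2 + l_2^2\dot\theta_2^2 + 2 l_1 l_2\,\dot\theta_1\dot\theta_2\left(\cos\theta_1\cos\theta_2 + \sin\theta_1\sin\theta_2\right)\right] \]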

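Here, we can use the identity \(\cos x \cos y + \sin x \sin y = \cos (x - y)\) to simplify to:

\[ K_2 = \frac{1}{2} m_2\left[l_1^2\dot\theta_1^2 + l_2^2\dot\theta_2^2 + 2 l_1 l_2\,\dot\theta_1\dot\theta_2\cos(\theta_1 - \theta_2)\right] \]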

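Now, combining the two kinetic energies together, we end up with:

\[ K = \frac{1}{2}(m_1 + m_2)\,l_1^2\dot\theta_1^2 + \frac{1}{2} m_2 l_2^2\dot\theta_2^2 + m_2 l_1 l_2\,\dot\theta_1\dot\theta_2\cos(\theta_1 - \theta_2) \]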
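This simplification involved several trigonometric steps, so a quick numerical sanity check is worthwhile. The sketch below is an illustrative addition; the function names and random test ranges are assumptions. It computes \(\frac{1}{2}m_1 v_1^2 + \frac{1}{2}m_2 v_2^2\) directly from the Cartesian velocities. It then compares that value with the closed form \(K = \frac{1}{2}(m_1 + m_2) l_1^2\dot\theta_1^2 + \frac{1}{2} m_2 l_2^2\dot\theta_2^2 + m_2 l_1 l_2\,\dot\theta_1\dot\theta_2\cos(\theta_1 - \theta_2)\) at many random states.

```python
import math
import random

def kinetic_cartesian(m1, m2, l1, l2, th1, th2, w1, w2):
    # Bob velocities from differentiating x1, y1, x2, y2 (w1, w2 are the angular velocities)
    vx1, vy1 = l1 * math.cos(th1) * w1, l1 * math.sin(th1) * w1
    vx2 = vx1 + l2 * math.cos(th2) * w2
    vy2 = vy1 + l2 * math.sin(th2) * w2
    return 0.5 * m1 * (vx1**2 + vy1**2) + 0.5 * m2 * (vx2**2 + vy2**2)

def kinetic_simplified(m1, m2, l1, l2, th1, th2, w1, w2):
    # K = 1/2 (m1+m2) l1^2 w1^2 + 1/2 m2 l2^2 w2^2 + m2 l1 l2 w1 w2 cos(th1 - th2)
    return (0.5 * (m1 + m2) * l1**2 * w1**2
            + 0.5 * m2 * l2**2 * w2**2
            + m2 * l1 * l2 * w1 * w2 * math.cos(th1 - th2))

random.seed(0)   # reproducible random states
worst = 0.0
for _ in range(1000):
    masses_lengths = [random.uniform(0.1, 5.0) for _ in range(4)]    # m1, m2, l1, l2
    angles = [random.uniform(-math.pi, math.pi) for _ in range(2)]   # theta1, theta2
    rates = [random.uniform(-3.0, 3.0) for _ in range(2)]            # theta1dot, theta2dot
    args = masses_lengths + angles + rates
    worst = max(worst, abs(kinetic_cartesian(*args) - kinetic_simplified(*args)))
print(f"largest discrepancy over 1000 random states: {worst:.1e}")
```

The discrepancy should be at the level of floating-point round-off. A sign error or a missing factor in the algebra would instead show up as an order-one difference.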

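We use a similar approach for the potential energies: we add the potential energies of the first and second pendulums to find the potential energy of the whole system:

\[ U = U_1 + U_2 = m_1 g y_1 + m_2 g y_2 = -m_1 g l_1\cos\theta_1 - m_2 g\left(l_1\cos\theta_1 + l_2\cos\theta_2\right) \]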

After factoring, we have:

\[ U = -(m_1 + m_2)\,g l_1\cos\theta_1 - m_2 g l_2\cos\theta_2 \]

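Using \(\mathcal{L} = K - U\), we substitute into the two Euler-Lagrange equations (one for \(\theta_1\) and one for \(\theta_2\)):

\[ \frac{\partial \mathcal{L}}{\partial \theta_1} - \frac{d}{dt}\left(\frac{\partial \mathcal{L}}{\partial \dot\theta_1}\right) = 0, \qquad \frac{\partial \mathcal{L}}{\partial \theta_2} - \frac{d}{dt}\left(\frac{\partial \mathcal{L}}{\partial \dot\theta_2}\right) = 0 \]

With the Lagrangian:

\[ \mathcal{L} = \frac{1}{2}(m_1 + m_2)\,l_1^2\dot\theta_1^2 + \frac{1}{2} m_2 l_2^2\dot\theta_2^2 + m_2 l_1 l_2\,\dot\theta_1\dot\theta_2\cos(\theta_1 - \theta_2) + (m_1 + m_2)\,g l_1\cos\theta_1 + m_2 g l_2\cos\theta_2 \]

To find the equations of motion, which are given by (source):

\[ (m_1 + m_2)\,l_1\ddot\theta_1 + m_2 l_2\,\ddot\theta_2\cos(\theta_1 - \theta_2) + m_2 l_2\,\dot\theta_2^2\sin(\theta_1 - \theta_2) + (m_1 + m_2)\,g\sin\theta_1 = 0 \]

\[ l_2\,\ddot\theta_2 + l_1\,\ddot\theta_1\cos(\theta_1 - \theta_2) - l_1\,\dot\theta_1^2\sin(\theta_1 - \theta_2) + g\sin\theta_2 = 0 \]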

These equations have no known closed-form analytical solution (the double pendulum is a chaotic system), but they can be solved numerically to find the positions of the two pendulum bobs over time.
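As one way to carry out that numerical solution, the sketch below treats the two equations of motion as a linear system in \(\ddot\theta_1\) and \(\ddot\theta_2\) and solves it by Cramer’s rule. It then steps the state forward with classic fourth-order Runge-Kutta. The determinant is \(l_1 l_2\,(m_1 + m_2\sin^2(\theta_1 - \theta_2))\), which is always positive, so the system can always be solved. The masses, lengths, initial angles (both arms horizontal, at rest), and step size are illustrative assumptions. Since there is no friction, the total energy \(K + U\) should stay constant, which gives a useful check on the integration.

```python
import math

g = 9.81                               # m/s^2
m1, m2, l1, l2 = 1.0, 1.0, 1.0, 1.0    # illustrative masses (kg) and arm lengths (m)

def accelerations(th1, th2, w1, w2):
    """Solve the two equations of motion for (theta1'', theta2'') by Cramer's rule.

    (m1+m2) l1 a1 + m2 l2 cos(d) a2 = -m2 l2 w2^2 sin(d) - (m1+m2) g sin(th1)
    l1 cos(d) a1 + l2 a2            =  l1 w1^2 sin(d) - g sin(th2),   d = th1 - th2
    """
    d = th1 - th2
    a11, a12 = (m1 + m2) * l1, m2 * l2 * math.cos(d)
    a21, a22 = l1 * math.cos(d), l2
    b1 = -m2 * l2 * w2**2 * math.sin(d) - (m1 + m2) * g * math.sin(th1)
    b2 = l1 * w1**2 * math.sin(d) - g * math.sin(th2)
    det = a11 * a22 - a12 * a21        # = l1*l2*(m1 + m2*sin(d)**2), never zero
    return (b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det

def energy(th1, th2, w1, w2):
    # Total energy K + U using the kinetic and potential energies derived above
    K = (0.5 * (m1 + m2) * l1**2 * w1**2 + 0.5 * m2 * l2**2 * w2**2
         + m2 * l1 * l2 * w1 * w2 * math.cos(th1 - th2))
    U = -(m1 + m2) * g * l1 * math.cos(th1) - m2 * g * l2 * math.cos(th2)
    return K + U

def deriv(state):
    th1, th2, w1, w2 = state
    a1, a2 = accelerations(th1, th2, w1, w2)
    return (w1, w2, a1, a2)

def simulate(state, dt=1e-3, t_end=10.0):
    """Classic RK4; returns every state (theta1, theta2, theta1', theta2') along the way."""
    history = [state]
    for _ in range(round(t_end / dt)):
        k1 = deriv(state)
        k2 = deriv(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)))
        k3 = deriv(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)))
        k4 = deriv(tuple(s + dt * k for s, k in zip(state, k3)))
        state = tuple(s + dt / 6 * (a + 2 * b + 2 * c + e)
                      for s, a, b, c, e in zip(state, k1, k2, k3, k4))
        history.append(state)
    return history

history = simulate((math.pi / 2, math.pi / 2, 0.0, 0.0))   # both arms horizontal, at rest
E0 = energy(*history[0])
drift = max(abs(energy(*s) - E0) for s in history)
print(f"final angles: {history[-1][0]:.3f} rad, {history[-1][1]:.3f} rad")
print(f"largest energy drift over 10 s: {drift:.1e} J")
```

Because the motion is chaotic, the final angles are very sensitive to the step size and initial conditions. The energy drift, by contrast, should stay small if the integration is accurate.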

Lagrangian to Newtonian mechanics

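Let’s see how we can recover Newton’s 2nd law from the Euler-Lagrange equation. Remember that the equation (in the case of one-dimensional motion along the \(x\) axis) is given by:

\[ \frac{\partial \mathcal{L}}{\partial x} - \frac{d}{dt}\left(\frac{\partial \mathcal{L}}{\partial \dot x}\right) = 0 \]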

And remember that the Lagrangian is given by:

\[ \mathcal{L} = \frac{1}{2} m\dot x^2 - U(x) \]

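Applying the Euler-Lagrange equation to this general Lagrangian, we get:

\[ -\frac{dU}{dx} - \frac{d}{dt}\left(m\dot x\right) = 0 \quad\Longrightarrow\quad m\ddot x = -\frac{dU}{dx} \]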

Recalling that \(F = -\frac{dU}{dx}\) and \(\ddot x = a\), we have recovered Newton’s 2nd law!

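We can even use Lagrangian mechanics on simple problems and check that it agrees with Newtonian mechanics. Let’s do our freefall example from earlier. With \(K = \frac{1}{2} m \dot y^2\) and \(U = mgy\), we use the Euler-Lagrange equation to find:

\[ \frac{\partial \mathcal{L}}{\partial y} - \frac{d}{dt}\left(\frac{\partial \mathcal{L}}{\partial \dot y}\right) = -mg - m\ddot y = 0 \]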

Which we can simplify to:

\[ \ddot y = -g \]

Which reproduces the Newtonian result!
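The principle of stationary action can be checked directly for this free-fall example too. In the sketch below, `action` approximates \(S = \int_0^1 \left(\frac{1}{2} m\dot y^2 - mgy\right) dt\) numerically; the names, the mass \(m = 1\), and the initial values are our own illustrative assumptions. For the Newtonian solution \(y(t) = 10 + 2t - \frac{1}{2}gt^2\), the first-order change in \(S\) under a variation \(\varepsilon\eta\) vanishes. Because this Lagrangian is quadratic in \(\dot y\) and linear in \(y\), the change is then exactly \(\delta S = \frac{1}{2}m\varepsilon^2\int \dot\eta^2\,dt = m\pi^2\varepsilon^2/4\) for \(\eta = \sin(\pi t)\). For the path \(y = 10 + 2t\), which ignores gravity, the first-order term \(-mg\varepsilon\int\eta\,dt = -2mg\varepsilon/\pi\) survives, so \(\delta S/\varepsilon\) does not go to zero.

```python
import math

g = 9.81   # gravitational acceleration, m/s^2
m = 1.0    # mass in kg (illustrative value)

def action(y, t1=0.0, t2=1.0, n=20_000, h=1e-6):
    """Midpoint-rule approximation of S = integral of (1/2 m ydot^2 - m g y) dt."""
    dt = (t2 - t1) / n
    total = 0.0
    for i in range(n):
        t = t1 + (i + 0.5) * dt
        ydot = (y(t + h) - y(t - h)) / (2 * h)   # central-difference velocity
        total += (0.5 * m * ydot**2 - m * g * y(t)) * dt
    return total

def free_fall(t):
    return 10.0 + 2.0 * t - 0.5 * g * t**2   # solves y'' = -g with y(0) = 10, y'(0) = 2

def no_gravity(t):
    return 10.0 + 2.0 * t                    # not a solution of y'' = -g

def eta(t):
    return math.sin(math.pi * t)             # variation vanishing at t = 0 and t = 1

for eps in (0.1, 0.01):
    dS_true = action(lambda t: free_fall(t) + eps * eta(t)) - action(free_fall)
    dS_wrong = action(lambda t: no_gravity(t) + eps * eta(t)) - action(no_gravity)
    print(f"eps = {eps}: dS/eps on free-fall path = {dS_true / eps:+.4f}, "
          f"on gravity-free path = {dS_wrong / eps:+.4f}")
```

On the free-fall path, \(\delta S/\varepsilon\) shrinks in proportion to \(\varepsilon\). On the gravity-free path, it approaches \(-2g/\pi \approx -6.25\) (with \(m = 1\)), showing that this path does not make the action stationary.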

Hamiltonian mechanics

Noether’s theorem

Field Lagrangians

Besides working with particles and their trajectories, we are also often interested in fields in physics, such as the electromagnetic or gravitational fields. To find the differential equations that describe these fields, we need an Euler-Lagrange equation for fields rather than particles.

Let us consider a generic field \(\varphi(\mathbf{r}, t)\). For reasons that will be elaborated in more detail in the special and general relativity sections (we give a rough outline in a note further down this section), it is conventional to group the space and time coordinates into one vector \(\mathbf{X}\). This vector has four components, one of time and three of space, where \(c\) is the speed of light:

\[ \mathbf{X} = (ct, x, y, z) \]

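It is also conventional to group the time derivative and gradient operators together into what we call the four-gradient, denoted \(\partial_\mathbf{X}\):

\[ \partial_\mathbf{X} = \left(\frac{1}{c}\frac{\partial}{\partial t}, \frac{\partial}{\partial x}, \frac{\partial}{\partial y}, \frac{\partial}{\partial z}\right) = \left(\frac{1}{c}\frac{\partial}{\partial t}, \nabla\right) \]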

Note

Note for the advanced reader: in special and general relativity, we call \(\mathbf{X}\) the four-position and write it in tensor notation as \(x^\mu\). Similarly, we call \(\partial_\mathbf{X}\) the four-gradient and write it in tensor notation as \(\partial_\mu\). We need these four-component vectors (and vector derivatives) because in the theories of relativity, four-dimensional spacetime becomes of fundamental importance, whereas space and time are reduced to perspective-dependent aspects of spacetime.

We will cover more on tensors in this chapter, but knowing tensors is not required for our (rough) treatment of Lagrangians in field theory. We will proceed with simply the four-position and four-gradient written in vector form.

With these new vectors we may write the field as \(\varphi(\mathbf{X})\). The action for the field is given by:

\[ S = \int_\Omega \mathcal{L}\left(\varphi, \partial_\mathbf{X}\varphi, \mathbf{X}\right) d^4x \]

Where \(\mathcal{L}\) is our field Lagrangian (strictly speaking, a Lagrangian density), \(d^4 x = dV\,dt\) is an infinitesimal portion of space and time, and \(\Omega\) is the domain of all space and all times. We will not repeat the full derivation of the Euler-Lagrange equation, but the steps are very similar to what we have already seen for the single-object Lagrangian. The result is the Euler-Lagrange equation for fields, which takes the form:

\[ \frac{\partial \mathcal{L}}{\partial \varphi} - \partial_\mathbf{X} \cdot \left(\frac{\partial \mathcal{L}}{\partial (\partial_\mathbf{X}\varphi)}\right) = 0 \]
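As an illustration of how the field equation is used, take a free scalar field with the Lagrangian density \(\mathcal{L} = \frac{1}{2}\left(\frac{1}{c}\frac{\partial\varphi}{\partial t}\right)^2 - \frac{1}{2}\left|\nabla\varphi\right|^2\). This is an example of our own choosing, not one worked in the text. We apply \(\frac{\partial \mathcal{L}}{\partial \varphi} - \partial_\mathbf{X}\cdot\frac{\partial \mathcal{L}}{\partial(\partial_\mathbf{X}\varphi)} = 0\), where the “divergence” \(\partial_\mathbf{X}\cdot\) is taken component by component in the vector notation used above:

\[ \frac{\partial \mathcal{L}}{\partial \varphi} = 0, \qquad \frac{\partial \mathcal{L}}{\partial (\partial_\mathbf{X}\varphi)} = \left(\frac{1}{c}\frac{\partial \varphi}{\partial t},\; -\nabla\varphi\right) \]

\[ \partial_\mathbf{X} \cdot \frac{\partial \mathcal{L}}{\partial (\partial_\mathbf{X}\varphi)} = \frac{1}{c^2}\frac{\partial^2 \varphi}{\partial t^2} - \nabla^2\varphi \]

\[ 0 - \left(\frac{1}{c^2}\frac{\partial^2 \varphi}{\partial t^2} - \nabla^2\varphi\right) = 0 \quad\Longrightarrow\quad \frac{1}{c^2}\frac{\partial^2 \varphi}{\partial t^2} = \nabla^2\varphi \]

This is the wave equation for a disturbance travelling at speed \(c\). The field Euler-Lagrange equation turns a single scalar Lagrangian density into a partial differential equation for the whole field.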

Lagrangians to the stars

Note

This section is optional and discusses advanced physics, so by all means read on if interested, but otherwise feel free to skip this part!

The Lagrangian formulation of classical mechanics is so powerful precisely because it rests on an equation that can be generalized. Beyond classical mechanics, the Lagrangian isn’t necessarily \(\mathcal{L} = K - U\), but the Euler-Lagrange equations still hold true, and so does the principle of stationary action. Thus, a physical theory, including one that involves fields, can be written as a Lagrangian. The Euler-Lagrange equations then yield the theory’s equations of motion, on which the rest of the theory is built! This is one of the main reasons for learning Lagrangian mechanics.

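We will end with one final thought. One of the most successful theories in all of physics, the Standard Model of particle physics (which is a quantum field theory), is encapsulated in one compact Lagrangian:

\[ \mathcal{L}_{\text{SM}} = -\frac{1}{4} F_{\mu\nu}F^{\mu\nu} + i\bar\psi\gamma^\mu D_\mu\psi + \text{h.c.} + \bar\psi_i\, y_{ij}\, \psi_j\, \phi + \text{h.c.} + \left|D_\mu\phi\right|^2 - V(\phi) \]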

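And one of the most mathematically beautiful theories, in fact one we will see very soon, General Relativity (a classical field theory of gravity), is described by another compact Lagrangian, the Einstein-Hilbert Lagrangian:

\[ \mathcal{L}_{\text{EH}} = \frac{c^4}{16\pi G}\, R\sqrt{-g} \]

where \(R\) is the Ricci scalar and \(g\) is the determinant of the spacetime metric.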

Who knew that Lagrangians could take us deep into the hearts of atoms, and to the furthest stars…? :)